NEURAL NETWORKS FOR 3D MOTION DETECTION FROM A SEQUENCE OF IMAGE FRAMES

In video surveillance, video signals from multiple remote locations are displayed on several TV screens, which are typically placed together in a control room. In the so-called third-generation surveillance systems (3GSS), all parts of the surveillance system will be digital and, consequently, digital video will be transmitted and processed. Additionally, 3GSS must include some intelligence to detect relevant events in the video signals automatically. This allows irrelevant time segments of the video sequences to be filtered out, so that only the segments requiring the attention of the surveillance operator are displayed on the TV screen. Motion detection is a basic operation in the selection of significant segments of the video signals.

Once motion has been detected, other features can be considered to decide whether a video signal has to be presented to the surveillance operator. If motion detection is performed after the video signals have been transmitted from the cameras to the control room, all the bit streams must first be decompressed. This can be a very demanding operation, especially if there are many cameras in the surveillance system. For this reason, it is worth considering motion detection algorithms that operate in the compressed transform domain.

In this thesis we present a motion detection algorithm in the compressed domain with a low computational cost. In the following section, we assume that video is compressed using Motion JPEG (MJPEG), in which each frame is individually JPEG compressed.

Motion detection from a moving observer has been a very important technique for computer vision applications. In recent years especially, vision-based navigation methods for autonomous driving systems and driver support systems have received more and more attention worldwide.

One of the most important tasks is to detect moving obstacles such as cars, bicycles or even pedestrians while the vehicle itself is travelling at high speed. Image differencing, either against a clear background or between adjacent frames, is widely used for motion detection. But when the observer is also moving, the background scene in the perspective projection image changes continuously, and it becomes more difficult to detect the truly moving objects by differencing methods. To deal with this problem, many approaches have been proposed in recent years.

Previous work in this area falls mainly into two categories: 1) using the difference in optical flow vectors between the background and the moving objects, and 2) calibrating the background displacement using the results of the camera’s 3D motion analysis. Methods in the first category calculate the optical flow and estimate the reliability of the flow vectors between adjacent frames. The major flow vector, which represents the motion of the background, can be used to classify and extract the flow vectors of the truly moving objects. However, because of its huge computational cost and the difficulty of determining accurate flow vectors, this approach is still impractical for real applications. Analysing the camera’s 3D motion and calibrating the background is the other main method for moving object detection.

For on-board camera motion analysis, many motion detection algorithms have been proposed that depend on previous recognition results such as road lane marks and the vanishing point on the horizon. These methods perform well in accuracy and efficiency because of their detailed analysis of road structure and measured vehicle motion. However, they are computationally expensive and overly dependent on road features such as lane marks, and therefore give unsatisfactory results when the lane marks are covered by other vehicles or do not exist at all. In contrast to these previous works, this paper presents a new method of moving object detection from an on-board camera. To deal with the background-change problem, our method uses the results of the camera’s 3D motion analysis to calibrate the background scene.

With pure point matching and the introduction of the camera’s Focus of Expansion (FOE), our method can in theory determine the camera’s rotation and translation parameters using only three pairs of matching points between adjacent frames, which makes it faster and more efficient for real-time applications. In section 2, we explain the camera’s 3D motion analysis with the introduction of the FOE. The detailed image processing methods for moving object detection, which include the corner extraction method and a fast matching algorithm, are then proposed in sections 3 and 4. In section 5, experimental results on a real outdoor road image sequence show the effectiveness and precision of our approach.

1.2 OBJECTIVE

One goal has been to compile an introduction to motion detection algorithms. A number of studies exist, but a complete reference on real-time motion detection is not as common. We have collected material from journals, papers and conferences and proposed an approach that is well suited to implementing real-time motion detection.

Another goal has been to search for algorithms that can be used to implement the most demanding components of audio encoding and watermarking. A third goal is to evaluate their performance with regard to the motion detected. These properties were chosen because they have the greatest impact on the implementation effort.

A final goal has been to design and implement an algorithm. This should be done in a high-level language or MATLAB. The source code should be easy to understand so that it can serve as a reference for designers who need to implement real-time motion detection.

4.7.1 Main Program Flow Chart

The main task of the software was to read the still images recorded from the camera, process them to detect motion, and take the necessary actions accordingly. Figure 4.7.1 below shows the general flow chart of the main program.

It starts with the general initialization of software parameters and the setup of objects. Then, once the program has started, the flag value that indicates whether the stop button has been pressed is checked.

If the stop button has not been pressed, the program reads the images and processes them using whichever of the two algorithms the operator selected. If motion is detected, it starts a series of actions and then goes back to read the next images; otherwise it goes directly to read the next images. Whenever the stop button is pressed, the flag value is set to zero and the program stops, memory is cleared and the necessary results are recorded. This terminates the program and returns control to the operator, who can then collect the results.
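The control flow described above can be sketched as follows. This is only an illustration in Python (the project itself was implemented in MATLAB), and the names run_detection_loop, detect, stop_requested and on_motion are hypothetical placeholders for the frame source, the selected algorithm, the stop flag and the action sequence.

```python
def run_detection_loop(frames, detect, stop_requested, on_motion):
    """Process frames until the stop flag is raised or the frames run out.

    frames         -- iterable of frames (any comparable representation)
    detect         -- function(previous, current) -> True if motion is found
    stop_requested -- function() -> True once the stop button was pressed
    on_motion      -- function(frame_index, frame) run when motion is found
    """
    processed = 0
    previous = None
    for frame in frames:
        # The stop flag is checked at the start of every cycle.
        if stop_requested():
            break
        # The first frame has no predecessor, so it is only stored.
        if previous is not None and detect(previous, frame):
            on_motion(processed, frame)
        previous = frame
        processed += 1
    return processed


# Toy usage: each "frame" is a single brightness value.
events = []
count = run_detection_loop(
    frames=[0, 0, 5, 5, 0],
    detect=lambda a, b: abs(a - b) > 1,
    stop_requested=lambda: False,
    on_motion=lambda index, frame: events.append(index),
)
```

In this toy run, motion is reported for the frames at index 2 and 4, where the value changes from its predecessor.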

Figure 4.7.1 Main Program Flow Diagram

4.7.2 Setup and Initializations

Figure 4.7.2 Setup and Initializations process

Figure 4.7.2 shows the flow chart for the setup and initialization process. This process includes launching the graphical user interface (GUI), where the type of motion detection algorithm is selected and the threshold value (the sensitivity of the detection) is initialized. The serial port and the video object are also set up during this stage.

This process takes approximately 15 seconds to complete, depending on the specifications of the PC used. For the serial port, it starts by selecting a communication port and reserving the memory addresses for that port; the PC then connects to the device using the communication settings mentioned in the previous chapter. The video object is part of the image acquisition process, but it must be set up at the start of the program.

4.7.3 Image acquisition

Figure 4.7.3 Image Acquisition Process

After the setup stage, image acquisition starts, as shown in figure 4.7.3 above. This process reads images from the PC camera and saves them in a format suitable for the motion detection algorithm. Three options were considered, of which one was implemented. The first option was to use auto-snapshot software that takes images automatically and saves them to the hard disk in JPEG format, with another program then reading these images in the same sequence in which they were saved.

It was found that the maximum speed attainable with this software is one frame per second, which limits the speed of detection. Synchronization was also required between the image processing and the auto-snapshot software, because the next images need to be available on the hard disk before they can be processed. The second option was to display live video on the screen and then capture the images from the screen. This is faster than the previous approach, but it also suffered from a synchronization problem: when the computer monitor goes into power-saving mode, only black images are produced for as long as the screen is blank.

The third option was to use the Image Acquisition Toolbox provided with MATLAB 7.5.1 or higher versions. The Image Acquisition Toolbox is a collection of functions that extends the capability of MATLAB. It supports a wide range of image acquisition operations, including acquiring images from many types of image acquisition devices, such as frame grabbers and USB PC cameras, previewing live video on the monitor, and reading the image data directly into the MATLAB workspace.

For this project, the videoinput function was used to initialize a video object that connects to the PC camera directly. The preview function was then used to display live video on the monitor, and the getsnapshot function was used to read images from the camera and place them in the MATLAB workspace.

The latter approach was implemented because it has many advantages over the others. It achieved the fastest capture rate, five frames per second, depending on algorithm complexity and PC processor speed. Furthermore, the synchronization problem was solved because images were both captured and processed by the same software.

All images read were converted into two-dimensional monochrome images, because the equations in the other algorithms in the system were designed for this image format.
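The text does not say how the conversion to monochrome was carried out. As a minimal sketch in Python (the project itself used MATLAB), one common choice is the weighted luminance sum with the coefficients documented for MATLAB's rgb2gray function; the function name to_monochrome and the nested-list image layout are assumptions made for this illustration.

```python
def to_monochrome(rgb_image):
    """Convert a rows x cols grid of (R, G, B) tuples to a 2D grayscale grid.

    Assumption: weighted luminance sum with the rgb2gray coefficients
    0.2989, 0.5870 and 0.1140. The original conversion is not specified.
    """
    return [
        [0.2989 * r + 0.5870 * g + 0.1140 * b for (r, g, b) in row]
        for row in rgb_image
    ]


# Toy usage: a 1 x 2 image with one white and one black pixel.
gray = to_monochrome([[(255, 255, 255), (0, 0, 0)]])
```

The result has the same number of rows and columns as the input but a single intensity value per pixel, which is the format the detection algorithms below operate on.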

4.7.4 Motion Detection Algorithm

A motion detection algorithm was applied to the previously read images. Two approaches to motion detection were implemented: the first uses two-dimensional cross correlation, while the second uses the sum of absolute differences algorithm. These are explained in detail in the next two subsections.

4.7.4.1 Motion Detection Using Cross Correlation

First, each of the two images was subdivided into four equal parts. This was done to increase the sensitivity of the calculation, since it is easier to notice a difference in part of an image than in the whole image. A two-dimensional cross correlation was then calculated between each sub-image and its corresponding part in the other image.

This process produces four values ranging from -1 to 1, depending on the difference between the two correlated images. Because the goal of the division was to achieve more sensitivity, the minimum correlation value is used as the reference for the threshold. In normal cases, motion can easily be detected when the threshold is set from the measured minimum cross correlation value. However, detection fails when the images contain global variations such as illumination changes, or when the camera moves.
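The quadrant-wise comparison can be sketched as follows. This Python illustration (the project itself was written in MATLAB) assumes that the correlation is the normalized 2D correlation coefficient, which is consistent with the stated range of -1 to 1; the function names and the handling of constant quadrants are choices made for this sketch, not taken from the original program.

```python
from math import sqrt


def split_quadrants(image):
    """Split a 2D grid into four parts: top-left, top-right, bottom-left, bottom-right."""
    half_rows, half_cols = len(image) // 2, len(image[0]) // 2
    top, bottom = image[:half_rows], image[half_rows:]
    return [
        [row[:half_cols] for row in top],
        [row[half_cols:] for row in top],
        [row[:half_cols] for row in bottom],
        [row[half_cols:] for row in bottom],
    ]


def correlation_coefficient(a, b):
    """Normalized correlation coefficient of two equally sized 2D grids."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    denominator = sqrt(
        sum((x - mean_x) ** 2 for x in xs) * sum((y - mean_y) ** 2 for y in ys)
    )
    # The coefficient is undefined for a constant quadrant; returning 1.0
    # ("no evidence of change") is an arbitrary convention of this sketch.
    return numerator / denominator if denominator else 1.0


def min_quadrant_correlation(previous, current):
    """Smallest of the four quadrant-to-quadrant correlation values."""
    pairs = zip(split_quadrants(previous), split_quadrants(current))
    return min(correlation_coefficient(p, c) for p, c in pairs)


# Toy usage: only the bottom-right quadrant differs between the two frames.
frame_a = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
frame_b = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 16, 15], [13, 14, 12, 11]]
lowest = min_quadrant_correlation(frame_a, frame_b)
```

Taking the minimum means that a change confined to one quadrant is not averaged away by the three unchanged quadrants.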

The figure above shows a test case with consecutive changes in illumination level, produced by switching the light on and off. While the lights are on (frames 1-50 and frames 100-145) the correlation value is around 0.998, and while the lights are off (frames 51-99 and frames 146-190) it is around 0.47. If the detection threshold were fixed at around 0.95, motion would be detected continuously during the lights-off periods.

To overcome this problem, continuous re-estimation of the threshold value was required. This can be done with an adaptive filter, but such a filter is not easy to design. Another solution is to look at the variance of the set of data produced by the cross correlation process and detect motion from it. This method solved the problem of changing illumination and camera movement.

The variance signal was calculated from the same set of images as in the test case above. It can be seen that the need to continuously re-estimate the threshold value is eliminated. Choosing a threshold of 1*10^-2 detects only the times when the lights are switched on and off. This results in a robust motion detection algorithm with high detection sensitivity.

4.7.4.2 Motion Detection Using Sum of Absolute Difference (SAD)

This algorithm is based on image differencing techniques.
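A minimal sketch of the SAD measure is given below, in Python for illustration (the project itself was written in MATLAB). The division by the number of pixels is an assumption of this sketch: the text does not state whether or how the sum was normalized, and the function name is hypothetical.

```python
def sum_absolute_difference(previous, current, normalize=True):
    """Sum of absolute pixel differences between two equally sized 2D grids.

    Assumption: with normalize=True the sum is divided by the pixel count,
    giving the mean absolute difference per pixel. The scaling used in the
    original program is not specified.
    """
    total = sum(
        abs(p - c)
        for prev_row, curr_row in zip(previous, current)
        for p, c in zip(prev_row, curr_row)
    )
    if normalize:
        return total / (len(previous) * len(previous[0]))
    return total


# Toy usage: two pixels change, by 2 and by 4 grey levels.
sad = sum_absolute_difference([[10, 10], [10, 10]], [[10, 12], [10, 14]])
```

Identical frames give a value of zero, and the value grows with the size and extent of the change between frames.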

The test case involved a large change in the scene being monitored by the camera, produced by moving the camera. Before the camera was moved the SAD value was around 1.87, and after it was moved the SAD value was around 2.2. If the detection threshold were fixed at a value below 2.2, motion would be detected continuously after the camera stopped moving.

To overcome this problem, the same solution that was applied to the correlation algorithm was used. The variance value was computed after collecting two SAD values, and the result for the same test case is shown in figure 4.7.4.3 below.

Figure 4.7.4.3 Variance Values as Reference for Threshold

This approach removes the need to continuously re-estimate the threshold value. Choosing a threshold of 1*10^-3 detects only the times when the camera is moved. This results in a robust motion detection algorithm that is not affected by illumination changes and camera movement.
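The effect of thresholding the variance rather than the raw value can be illustrated with the SAD levels quoted above. The Python sketch below (the project itself was written in MATLAB) assumes the sample variance of each pair of consecutive SAD values; the function name and the short value sequence are made up for the illustration.

```python
from statistics import variance


def motion_flags(metric_values, threshold):
    """Flag motion wherever the variance of two consecutive values exceeds the threshold.

    Assumption: sample variance (divisor n - 1) over each consecutive pair,
    which for two values a and b equals (a - b) ** 2 / 2.
    """
    return [
        variance([previous, current]) > threshold
        for previous, current in zip(metric_values, metric_values[1:])
    ]


# Illustrative sequence: the SAD level steps from about 1.87 to about 2.2
# when the camera is moved and then stays at the new level.
sad_values = [1.87, 1.87, 2.2, 2.2, 2.2]
flags = motion_flags(sad_values, threshold=1e-3)
```

Only the step from 1.87 to 2.2 is flagged, since its pair variance is (0.33)^2 / 2, about 0.054. Once the SAD value settles at the new level the variance returns to zero, so a fixed threshold no longer fires continuously.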

4.7.5 Actions on Motion Detection

Before explaining the series of actions that take place when motion is detected, it is worth mentioning that the calculated variance values, whether above or below the threshold, are stored in an array, which is later used to produce a plot of frame number vs. variance value. This plot helps in comparing the variance values against the threshold in order to choose the optimum threshold value.

Figure 4.7.5 Actions on Motion Detection

Whenever the variance value is less than the threshold, the image is dropped and only the variance value is recorded. However, when the variance value is greater than the threshold, a sequence of actions is started.

As the flow chart above shows, a number of activities take place when motion is detected.

First, the serial port is triggered by a pulse from the PC; this pulse is used to activate external circuits connected to the PC. A log file is also created and then appended with the time and date of the motion, together with the frame number in which the motion occurred. Another action is to display the detected image on the monitor. Finally, the image in which motion was detected is converted to a movie frame and added to the film structure.
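The logging step can be sketched as follows, in Python for illustration (the project itself was written in MATLAB). The entry layout, the function names and the file path are assumptions; the text specifies only that the date, the time and the frame number are recorded.

```python
from datetime import datetime


def format_log_entry(frame_number, when):
    """Build one log line holding the date, time and frame number of a detection."""
    return f"{when:%Y-%m-%d %H:%M:%S} motion detected at frame {frame_number}"


def append_log_entry(path, frame_number, when=None):
    """Append one detection entry to the log file, creating the file if needed."""
    entry = format_log_entry(frame_number, when or datetime.now())
    # Mode "a" creates the file on first use and appends on later detections.
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(entry + "\n")
    return entry
```

Opening the file in append mode covers both cases described above: the file is created on the first detection and extended on every later one.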

4.7.6 Break and clear Process

After the motion detection algorithm has been applied to the images, the program checks whether the stop button on the GUI has been pressed. If it has, the flag value is changed from one to zero, the program breaks out of the loop, and control returns to the GUI. Next, both the serial port object and the video object are cleared. This is a cleanup stage in which the devices connected to the PC through those objects are released and the memory space is freed.

4.7.7 Data Record

Finally, when the program terminates, a data collection process starts in which the variables and arrays holding result data in memory are stored on the hard disk.

This approach was used to separate the real-time image processing from the processing of results. It has the advantage that the data can be recalled whenever required. The variables stored from memory to the hard disk are the variance values and the movie structure that contains all the frames with motion.

At this point, control returns to the GUI, where the operator can call back the results that were archived while the system was running.

The next section explains the design of the GUI, highlighting the results and callbacks of each button.

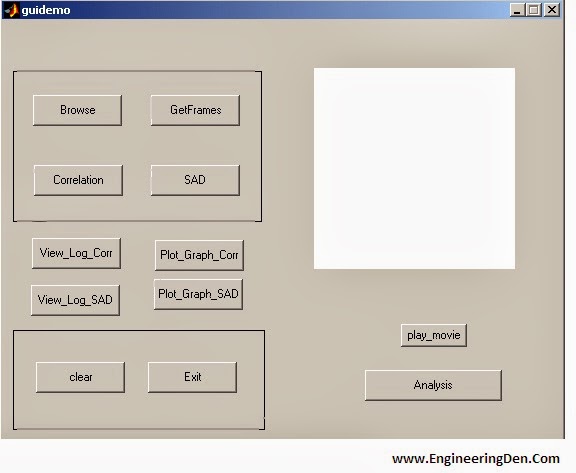

4.8 GRAPHICAL USER INTERFACE DESIGN

The GUI was designed to facilitate interactive system operation. It can be used to set up the program, launch it, stop it and display results. During the setup stage, the operator is prompted to choose a motion detection algorithm and select the degree of detection sensitivity.

Whenever the start/stop toggle button is pressed, the system is launched and the selected program is called to perform the calculations until the start/stop button is pressed again, which terminates the calculation and returns control to the GUI. Results can be viewed as a log file, a movie and a plot of frame number vs. variance value. Figure 4.8 illustrates a flow chart of the steps performed using the GUI.

Figure 4.8 GUI flow Chart

The complete GUI code is included in the appendix.

Figure 4.9 GUI Layout Design

The GUI, as shown in figure 4.9, was designed using the MATLAB GUI Builder. It consists of two radio buttons, two sliders, two static text boxes, four push buttons and a toggle button. Sliders and radio buttons were used to prevent the entry of a wrong value or selection. The radio buttons are used to choose either the SAD algorithm or the 2D cross correlation algorithm.

The two algorithms cannot be selected at the same time. Sliders are used to select how sensitive the detection is; maximum sensitivity is achieved by moving the slider to the left.

The static text shows the instantaneous value of the threshold as read from the sliders. When the GUI is first launched, it selects the optimum value for both algorithms as the default value. In the control panel, the Start/Stop button is used to launch the program when it is first pushed and to terminate the program when it is pushed again. Figure 4.10 shows the flow chart of commands executed when this button is pressed.

Figure 4.10 Start/Stop button flow chart

Whenever the Start/Stop button is first pushed, it sets the value of the flag to 1 and then checks the value of the radio buttons to determine which main program to launch. When the log file icon is pushed, a log file containing the time and date of each motion, with its frame number, is opened.

When the exit button is pushed, the program is closed and MATLAB is shut down. Whenever the show movie icon is pushed, MATLAB loads the previously created film structure from the hard disk, converts the structure into a movie and displays it on the screen at a rate of 5 frames per second. Finally, the movie is compressed using the Indeo3 compression technique and saved on the hard disk under the name [film.avi]. Figure 4.11 below shows the commands executed when this icon is pushed.

Figure 4.11 Movie Button Callbacks

The last button, the show plot icon, loads the variance values and plots them against the frame number.

CHAPTER 7

CONCLUSION

We have presented an algorithm to detect motion in video sequences. It has a very low computational cost. After a period of calibration in which the threshold value is set, the algorithm is able to detect moving blocks in every frame by counting sign changes in the quadrants.

The number of moving blocks per frame allows the periods of time with motion to be determined. It appears that simulation in the stimulant processor provides accuracy, rather than real-time motion detection using m-files. We therefore conclude that real-time motion detection can best be implemented by our proposed approach, based on the correlation network and the sum of absolute differences.

CHAPTER 8

FUTURE ENHANCEMENT

This paper describes a 3D motion detection system. Five specially designed neural networks are introduced: the correlation network, the rough motion detection network, the edge enhancement network, the background remover and the normalization network.

This project provides a clear image of the object when it is in motion. The motion detection image is captured within 9-10 seconds. The same function can be implemented in moving cameras for easier surveillance. Images are captured within 2-4 seconds with the precise algorithm using multiple inputs (more crowded images). Since only two objects were detected, the processing of all three stages took only 8 seconds.